This is probably the most important game I’ve ever played. It’s the most important game you will ever play. It touches on themes that are even more relevant now than they were in 2019, the year it was released, in particular the uses of AI and its moral implications.

Gameplay

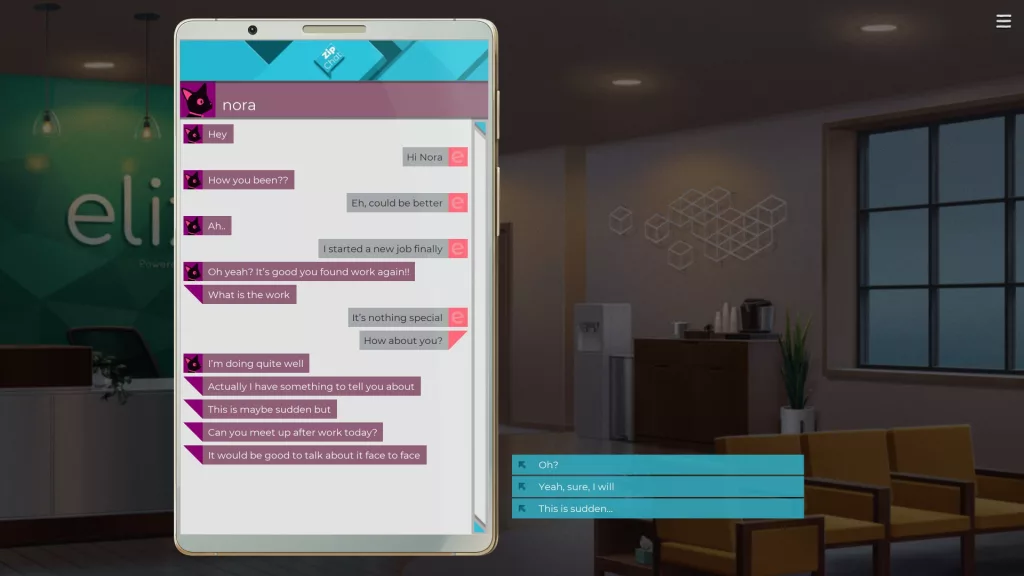

Eliza is not really a game in the traditional sense. It is a visual novel. To talk about the gameplay wouldn’t do it justice. You click through the story and make occasional choices about dialogue.

The choices you do have, at least early in the game, have little effect on the overall story. As the game nears its end, your choices start to really matter.

You might read what I just wrote as a criticism. It’s not. The interactivity (or lack of it) is a magnificent tool that helps draw you into the story of Eliza.

Story

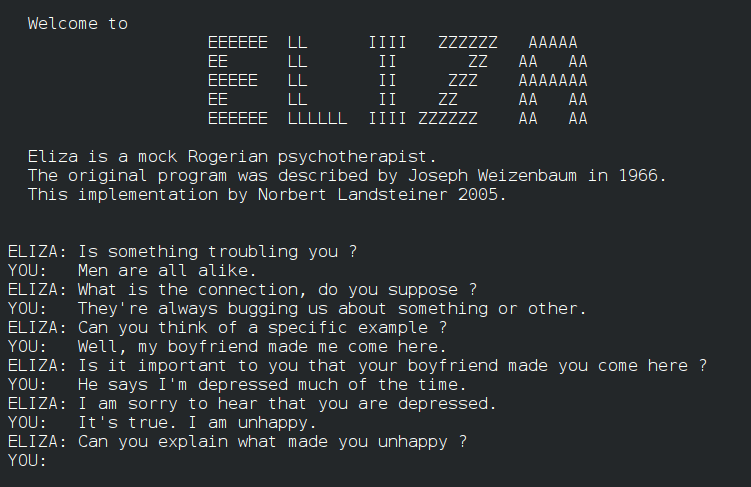

The story focuses on an application called Eliza. Named after a natural language processing (NLP) program from the 1960s, Eliza is an app designed to automate therapy. You play as Evelyn, who has just gotten a job as a “proxy”, a person who speaks for the app. The app listens to clients, tells the proxy what to say, and the proxy says it.

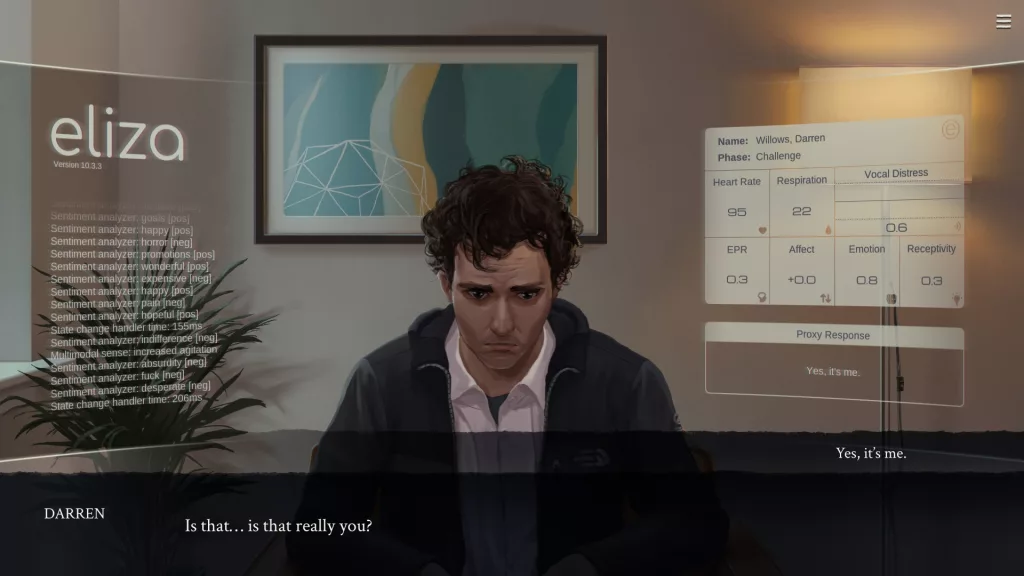

Before you have your first client, your manager reminds you to “stick to the script”. You go into the room with your first client and you start to say what Eliza tells you to. This is where both the interactivity and lack of choice shine. You have to click on the phrase Eliza gives you in order to say it. But there is only one option.

Through the magic of gameplay (or lack of it), the game communicates clearly that you have to say something, but you don’t have a choice in what you say. Most of your therapy sessions are like this, and as you work through more of them you start to understand how Eliza works. You learn its structure, and maybe start to see its flaws.

The first session you take is pretty intense. The client demands to stop talking to an AI. He angrily demands to speak to a human. Then it happens. The AI pretends to be Evelyn.

It’s a shocking moment, one that works because you have no choice.

Themes

The game touches on themes that have become even more relevant in our post-Covid world. The rising demand for therapy. The need to end suffering. Privacy and handing data over to corporations. The automation of every aspect of our lives. The rise of AI that can write poetry. In 2019 these were close to science-fiction concepts. Today, they are a reality.

It poses questions, big questions, but it doesn’t provide answers. The game provides a space for you to think about these things. If technology can be used for immoral deeds, should we still develop it? Can something as complex as therapy be automated? Should we end suffering, or is suffering what makes us human? Can AI create art and poetry, and when it does (or already has), is it really art? What is the definition of consciousness?

Humanity

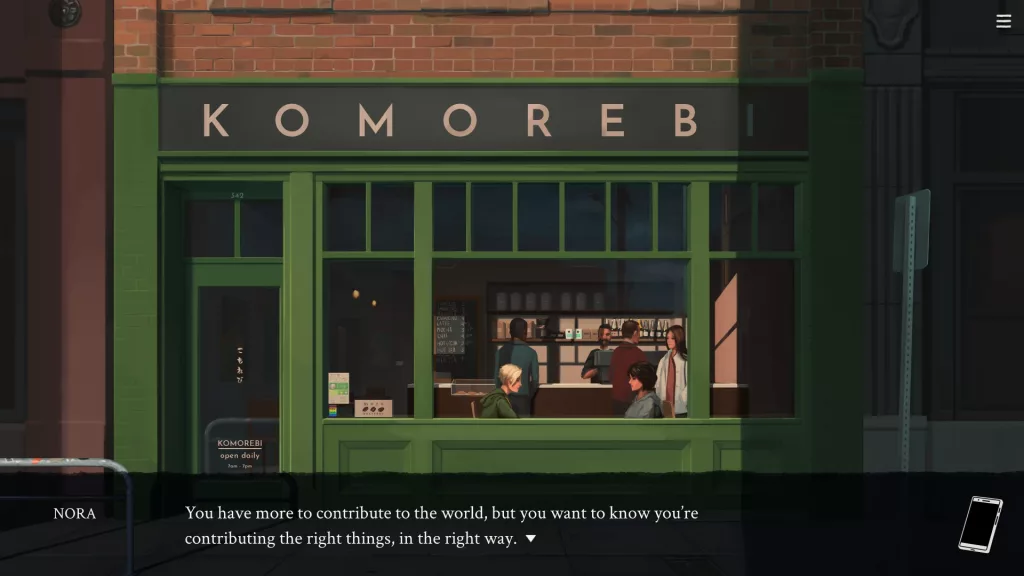

And yet, Eliza is a deeply human story. It poses all of these questions, while only allowing you to answer one: Where do I fit in all of this?

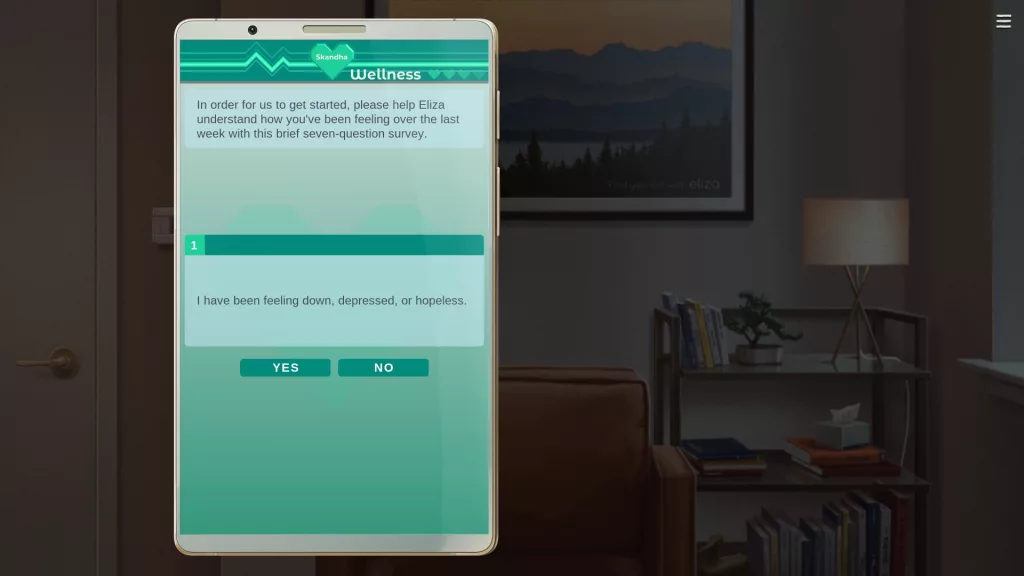

This is perhaps the most important question. Near the end of the game you are allowed more choice. As Evelyn, you go into a therapy session for yourself. You are asked questions you don’t know how to answer as Evelyn. So instinctively, you might end up answering for yourself. Suddenly, Evelyn becomes a proxy for you.

Eliza, the original Eliza NLP program from the 1960s, would simply parrot back what the user said as a question. In the game’s story, Evelyn is revealed to be one of the core developers on the new Eliza. Evelyn saw the new software, like the original Eliza, as something that would become a mirror for its users.

This is what the game becomes. Just like Eliza, both the NLP and the therapy software, Evelyn becomes a mirror for you. I made the choices I thought would lead to the best ending for Evelyn. But the thought lingers in my mind. Was I choosing for her, or for myself?

Where do I fit into all this?

